Keywords: beta-binomial | hierarchical | pymc3 | posterior predictive | Download Notebook

Contents

%matplotlib inline

import numpy as np

from scipy import stats

from scipy.stats import norm

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style('white')

sns.set_context('paper')

import pandas as pd

import pymc3 as pm

from pymc3 import Model, Normal, HalfNormal

import time

Tumors in rats

Let us try to do full Bayesian inference with PyMC3 for the rat tumor example that we have solved using Gibbs sampling in a previous lab. Remember that the goal is to estimate $\theta$, the probability of developing a tumor in a population of female rats that have not received treatement. Data of a particular experiment shows that 4 out of 14 rats develop the tumor. But we also have historical literature data for other 70 experiments, which give estimates $\theta_i$.

For convenience, we adopt a prior for $\theta_i$ from the conjugate Beta family: $\theta_i \sim Beta(\alpha, \beta)$. If we had an expert telling us the values for $\alpha$ and $\beta$, we could just calculate the posterior using the conjugate rules. But we do not usually have that. We have, instead, the historical data, that we can use to perform inference for $\theta_i$, the tumor probability in the current experiment

It is natural the model the number $y_i$ of tumors for each experiment performed on a total of $n_i$ rats as a binomial:

\[p(y_i \vert \theta_i; n_i) = Binom(n_i, y_i, \theta_i)\]We can now write a joint posterior distribution for the $\theta$s, $\alpha$ and $\beta$, assuming partial pooling (i.e., hierarchical Bayes), where the $\theta_i$ is assumed to be different for each experiment, but all drawn from the same Beta distribution with parameteres $\alpha$ and $\beta$:

\(p( \theta_i, \alpha, \beta \vert y_i, n_i) \propto p(\alpha, \beta) \, p(\theta_i \vert \alpha, \beta) \, p(y_i \vert \theta_i, n_i,\alpha, \beta)\) or for the whole dataset: \(p( \Theta, \alpha, \beta \vert Y, \{n_i\}) \propto p(\alpha, \beta) \prod_{i=1}^{70} Beta(\theta_i, \alpha, \beta) \prod_{i=1}^{70} Binom(n_i, y_i, \theta_i)\)

So we only need to figure out the prior for the hyperparameters: $p(\alpha,\beta)$. We have shown that it is convenient to use uniform priors on the alernative variables $\mu$ (the mean of the beta distribution) and $\nu$:

\(\mu = \frac{\alpha}{\alpha+\beta}\) \(\nu = (\alpha+\beta)^{-1/2}\)

which yiels a prior for $\alpha$ and $\beta$ of the form:

\[p(\alpha,\beta) \sim (\alpha+\beta)^{-5/2}\]Let us firs load the data:

tumordata="""0 20

0 20

0 20

0 20

0 20

0 20

0 20

0 19

0 19

0 19

0 19

0 18

0 18

0 17

1 20

1 20

1 20

1 20

1 19

1 19

1 18

1 18

3 27

2 25

2 24

2 23

2 20

2 20

2 20

2 20

2 20

2 20

1 10

5 49

2 19

5 46

2 17

7 49

7 47

3 20

3 20

2 13

9 48

10 50

4 20

4 20

4 20

4 20

4 20

4 20

4 20

10 48

4 19

4 19

4 19

5 22

11 46

12 49

5 20

5 20

6 23

5 19

6 22

6 20

6 20

6 20

16 52

15 46

15 47

9 24

"""

And now let us create two arrays, one for the observed tumors and one for the total number of rats in each of the 70 experiments.

tumortuples=[e.strip().split() for e in tumordata.split("\n")]

tumory=np.array([np.int(e[0].strip()) for e in tumortuples if len(e) > 0])

tumorn=np.array([np.int(e[1].strip()) for e in tumortuples if len(e) > 0])

print(tumory, tumorn)

print(np.shape(tumory))

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 3 2 2

2 2 2 2 2 2 2 1 5 2 5 2 7 7 3 3 2 9 10 4 4 4 4 4 4

4 10 4 4 4 5 11 12 5 5 6 5 6 6 6 6 16 15 15 9] [20 20 20 20 20 20 20 19 19 19 19 18 18 17 20 20 20 20 19 19 18 18 27 25 24

23 20 20 20 20 20 20 10 49 19 46 17 49 47 20 20 13 48 50 20 20 20 20 20 20

20 48 19 19 19 22 46 49 20 20 23 19 22 20 20 20 52 46 47 24]

(70,)

Just to have some intuition let us get the naive probabilities ($y_i/n_i$) of developing a tumor for each of the 70 experiments, and let’s print the mean and the standard deviation:

tumor_rat = [float(e[0])/float(e[1]) for e in zip(tumory, tumorn)]

#print (tumor_rat)

tmean = np.mean(tumor_rat)

tvar = np.var(tumor_rat)

print(tmean, tvar)

0.13600653889 0.0105576406236

We now write the model in PyMC3. PyMC3 will by default use the NUTS algorithm, unless told otherwise. Once the step method has been chosen, PyMC3 will also optimize for the parameters of the method (step sizes, proposal distributions, scaling, starting values for the parameters, etc), but we can also manually set those. Here we use both NUTS and Metropolis to perform the sampling. First let us load the relevant probability distributions

Setting up the PyMC3 model

Now let us set up the model. Note the simplification with respect to the Gibbs sampler we have used earlier. Because PyMC3 takes care of refining the parameters of the selected step method, or uses gradient-based methods for the sampling, it does not require us to specify the conditionals distributions for $\alpha$ and $\beta$. We only need to specify the priors for $\mu$ and $\nu$, and then write expressions for $\alpha$ and $\beta$ as a function of $\mu$ and $\nu$. Note that we use the pm.Deterministic function to define $\alpha$ and $\beta$ and give them proper names. Without a name, these variables will not be included in the trace.

# pymc3

from pymc3 import Uniform, Normal, HalfNormal, HalfCauchy, Binomial, Beta, sample, Model # Import relevant distributions

N = tumorn.shape[0]

with Model() as tumor_model:

# Uniform priors on the mean and variance of the Beta distributions

mu = Uniform("mu",0.00001,1.)

nu = Uniform("nu",0.00001,1.)

#nu = HalfCauchy("nu", beta = 1.)

# Calculate hyperparameters alpha and beta as a function of mu and nu

alpha = pm.Deterministic('alpha', mu/(nu*nu))

beta = pm.Deterministic('beta', (1.-mu)/(nu*nu))

# Priors for each theta

thetas = Beta('theta', alpha, beta, shape=N)

# Data likelihood

obs_deaths = Binomial('obs_deaths', n=tumorn, p=thetas, observed=tumory)

from pymc3 import find_MAP

with tumor_model:

# instantiate sampler

step = pm.Metropolis()

# draw 2000 posterior samples

tumor_trace = pm.sample(200000, step=step)

Multiprocess sampling (2 chains in 2 jobs)

CompoundStep

>Metropolis: [theta]

>Metropolis: [nu]

>Metropolis: [mu]

Sampling 2 chains: 100%|██████████| 401000/401000 [04:26<00:00, 1505.99draws/s]

The gelman-rubin statistic is larger than 1.2 for some parameters.

The estimated number of effective samples is smaller than 200 for some parameters.

mtrace = tumor_trace[100000::25]

pm.summary(mtrace)

| mean | sd | mc_error | hpd_2.5 | hpd_97.5 | n_eff | Rhat | |

|---|---|---|---|---|---|---|---|

| mu | 5.000669e-01 | 6.287878e-05 | 0.000006 | 4.999661e-01 | 5.001860e-01 | 3.234569 | 1.360175 |

| nu | 3.611176e-04 | 8.753924e-05 | 0.000008 | 2.014466e-04 | 5.066631e-04 | 1.942442 | 1.652039 |

| alpha | 4.727586e+06 | 2.801670e+06 | 261826.826631 | 1.566256e+06 | 1.042954e+07 | 2.390785 | 1.526307 |

| beta | 4.726268e+06 | 2.800994e+06 | 261764.660399 | 1.566473e+06 | 1.042653e+07 | 2.392376 | 1.525924 |

| theta__0 | 5.000391e-01 | 1.800322e-04 | 0.000018 | 4.997121e-01 | 5.003853e-01 | 9.831093 | 0.999910 |

| theta__1 | 5.001020e-01 | 1.329097e-04 | 0.000013 | 4.998569e-01 | 5.003674e-01 | 26.436879 | 1.099340 |

| theta__2 | 5.001533e-01 | 1.659936e-04 | 0.000016 | 4.998351e-01 | 5.004404e-01 | 12.091202 | 1.007664 |

| theta__3 | 4.999507e-01 | 2.331874e-04 | 0.000023 | 4.994840e-01 | 5.003726e-01 | 3.010059 | 1.402556 |

| theta__4 | 5.002441e-01 | 1.948635e-04 | 0.000019 | 4.999769e-01 | 5.006585e-01 | 7.904370 | 1.194238 |

| theta__5 | 5.000091e-01 | 2.041813e-04 | 0.000020 | 4.996871e-01 | 5.003607e-01 | 3.004450 | 1.384933 |

| theta__6 | 4.999200e-01 | 1.604717e-04 | 0.000015 | 4.996485e-01 | 5.002131e-01 | 2.712364 | 1.401897 |

| theta__7 | 5.001111e-01 | 2.240313e-04 | 0.000022 | 4.995906e-01 | 5.004315e-01 | 5.876380 | 1.179545 |

| theta__8 | 5.001553e-01 | 1.604234e-04 | 0.000015 | 4.999116e-01 | 5.005172e-01 | 11.259525 | 1.049591 |

| theta__9 | 5.000615e-01 | 1.719356e-04 | 0.000017 | 4.996643e-01 | 5.003404e-01 | 10.994698 | 1.065421 |

| theta__10 | 5.000502e-01 | 1.883012e-04 | 0.000018 | 4.997173e-01 | 5.004508e-01 | 19.463268 | 1.001215 |

| theta__11 | 5.000578e-01 | 1.575548e-04 | 0.000015 | 4.997069e-01 | 5.003717e-01 | 11.026666 | 1.058203 |

| theta__12 | 5.000756e-01 | 1.698583e-04 | 0.000016 | 4.997752e-01 | 5.003683e-01 | 3.960245 | 1.319004 |

| theta__13 | 5.000070e-01 | 1.786940e-04 | 0.000017 | 4.996324e-01 | 5.003333e-01 | 7.675879 | 1.120249 |

| theta__14 | 5.001392e-01 | 2.366688e-04 | 0.000023 | 4.997431e-01 | 5.005740e-01 | 6.399215 | 1.087414 |

| theta__15 | 4.999389e-01 | 1.429021e-04 | 0.000014 | 4.996461e-01 | 5.002203e-01 | 10.493800 | 1.191283 |

| theta__16 | 5.000264e-01 | 1.527704e-04 | 0.000015 | 4.997020e-01 | 5.002922e-01 | 8.520209 | 1.152993 |

| theta__17 | 4.999154e-01 | 1.854030e-04 | 0.000018 | 4.995322e-01 | 5.002151e-01 | 3.399206 | 1.321350 |

| theta__18 | 5.000417e-01 | 1.937594e-04 | 0.000019 | 4.996465e-01 | 5.004034e-01 | 10.537999 | 1.006096 |

| theta__19 | 5.001232e-01 | 1.455825e-04 | 0.000014 | 4.998634e-01 | 5.004082e-01 | 7.147684 | 1.126504 |

| theta__20 | 5.000995e-01 | 1.986575e-04 | 0.000019 | 4.996207e-01 | 5.004306e-01 | 8.625162 | 1.128328 |

| theta__21 | 5.001336e-01 | 1.439817e-04 | 0.000014 | 4.998322e-01 | 5.003975e-01 | 17.871090 | 1.044630 |

| theta__22 | 5.000656e-01 | 1.165241e-04 | 0.000011 | 4.998488e-01 | 5.002999e-01 | 29.477362 | 1.066967 |

| theta__23 | 5.000234e-01 | 1.151251e-04 | 0.000011 | 4.998260e-01 | 5.002795e-01 | 29.281858 | 1.000100 |

| theta__24 | 5.001275e-01 | 1.492349e-04 | 0.000014 | 4.998598e-01 | 5.004422e-01 | 18.561020 | 1.000380 |

| theta__25 | 5.000606e-01 | 2.124652e-04 | 0.000021 | 4.997016e-01 | 5.005374e-01 | 6.958177 | 1.007100 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| theta__40 | 5.001775e-01 | 1.871297e-04 | 0.000018 | 4.998663e-01 | 5.005601e-01 | 15.097984 | 1.000517 |

| theta__41 | 5.000726e-01 | 2.399461e-04 | 0.000024 | 4.994396e-01 | 5.004034e-01 | 5.370408 | 1.161731 |

| theta__42 | 5.000981e-01 | 1.682222e-04 | 0.000016 | 4.997105e-01 | 5.003691e-01 | 9.326048 | 1.066904 |

| theta__43 | 4.999549e-01 | 2.211898e-04 | 0.000022 | 4.995577e-01 | 5.003209e-01 | 2.878013 | 1.395574 |

| theta__44 | 5.000656e-01 | 1.583165e-04 | 0.000015 | 4.998096e-01 | 5.003818e-01 | 16.496075 | 1.002571 |

| theta__45 | 4.999942e-01 | 1.608783e-04 | 0.000016 | 4.996740e-01 | 5.002416e-01 | 13.352827 | 1.106972 |

| theta__46 | 5.001250e-01 | 1.663445e-04 | 0.000016 | 4.998137e-01 | 5.005003e-01 | 19.084825 | 1.051638 |

| theta__47 | 5.000473e-01 | 1.914011e-04 | 0.000019 | 4.996274e-01 | 5.003780e-01 | 9.577494 | 1.078678 |

| theta__48 | 5.000222e-01 | 1.519040e-04 | 0.000015 | 4.996622e-01 | 5.003131e-01 | 17.045429 | 1.075165 |

| theta__49 | 4.999924e-01 | 1.781156e-04 | 0.000017 | 4.996150e-01 | 5.003196e-01 | 9.367578 | 1.013653 |

| theta__50 | 5.001496e-01 | 1.675654e-04 | 0.000016 | 4.998011e-01 | 5.004607e-01 | 19.557957 | 1.006750 |

| theta__51 | 5.001616e-01 | 2.013671e-04 | 0.000020 | 4.998633e-01 | 5.006041e-01 | 5.466876 | 1.193948 |

| theta__52 | 5.001461e-01 | 1.623611e-04 | 0.000016 | 4.998244e-01 | 5.005076e-01 | 9.804783 | 1.007959 |

| theta__53 | 5.000805e-01 | 1.783615e-04 | 0.000017 | 4.997702e-01 | 5.004180e-01 | 8.014413 | 1.182770 |

| theta__54 | 5.000096e-01 | 1.369743e-04 | 0.000013 | 4.997913e-01 | 5.002760e-01 | 9.935164 | 1.032204 |

| theta__55 | 5.001198e-01 | 1.293484e-04 | 0.000012 | 4.998772e-01 | 5.003745e-01 | 22.275240 | 1.002406 |

| theta__56 | 5.000050e-01 | 1.478372e-04 | 0.000014 | 4.996954e-01 | 5.002809e-01 | 19.140730 | 1.019614 |

| theta__57 | 5.000829e-01 | 1.775987e-04 | 0.000017 | 4.997650e-01 | 5.004582e-01 | 5.644519 | 1.155416 |

| theta__58 | 5.000725e-01 | 1.508687e-04 | 0.000014 | 4.997883e-01 | 5.003515e-01 | 7.784004 | 1.199499 |

| theta__59 | 5.000540e-01 | 1.414569e-04 | 0.000013 | 4.997392e-01 | 5.002932e-01 | 5.673277 | 1.243000 |

| theta__60 | 4.999827e-01 | 1.757847e-04 | 0.000017 | 4.995909e-01 | 5.002680e-01 | 3.259067 | 1.349769 |

| theta__61 | 5.000376e-01 | 1.778730e-04 | 0.000017 | 4.996996e-01 | 5.003708e-01 | 13.820166 | 1.012429 |

| theta__62 | 5.000823e-01 | 1.784750e-04 | 0.000017 | 4.997655e-01 | 5.004359e-01 | 11.812727 | 1.032088 |

| theta__63 | 4.999897e-01 | 2.581503e-04 | 0.000025 | 4.994230e-01 | 5.004484e-01 | 2.906859 | 1.420999 |

| theta__64 | 5.000612e-01 | 1.383971e-04 | 0.000013 | 4.998412e-01 | 5.003580e-01 | 15.026356 | 1.002756 |

| theta__65 | 5.000979e-01 | 2.324506e-04 | 0.000023 | 4.997207e-01 | 5.006178e-01 | 2.778490 | 1.432332 |

| theta__66 | 5.001369e-01 | 1.670882e-04 | 0.000016 | 4.998011e-01 | 5.004797e-01 | 8.248015 | 1.008637 |

| theta__67 | 5.000645e-01 | 2.151833e-04 | 0.000021 | 4.996766e-01 | 5.005471e-01 | 3.908657 | 1.318731 |

| theta__68 | 5.000867e-01 | 1.333601e-04 | 0.000013 | 4.998251e-01 | 5.003215e-01 | 10.326565 | 1.044398 |

| theta__69 | 5.001163e-01 | 1.660164e-04 | 0.000016 | 4.997761e-01 | 5.004282e-01 | 6.906468 | 1.179012 |

74 rows × 7 columns

from pymc3 import traceplot

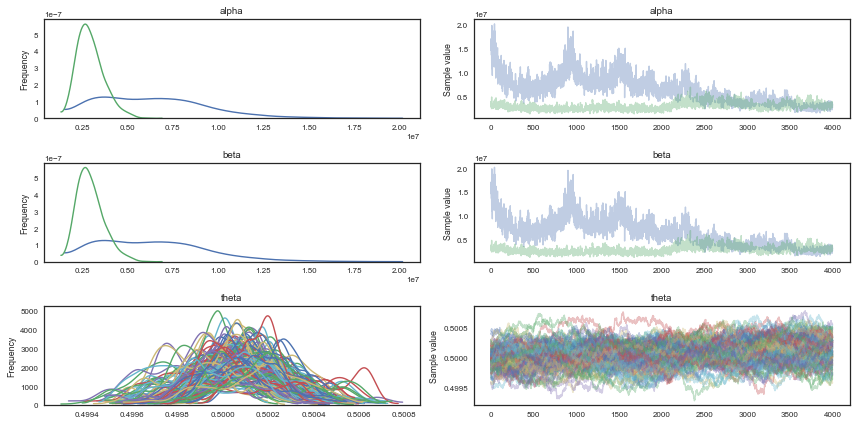

traceplot(mtrace, varnames=['alpha','beta','theta'])

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_base.py:3449: MatplotlibDeprecationWarning:

The `ymin` argument was deprecated in Matplotlib 3.0 and will be removed in 3.2. Use `bottom` instead.

alternative='`bottom`', obj_type='argument')

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x1086012e8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x184a15d68>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x184a3f518>,

<matplotlib.axes._subplots.AxesSubplot object at 0x1887e3ac8>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x12fe6db38>,

<matplotlib.axes._subplots.AxesSubplot object at 0x184a1e940>]], dtype=object)

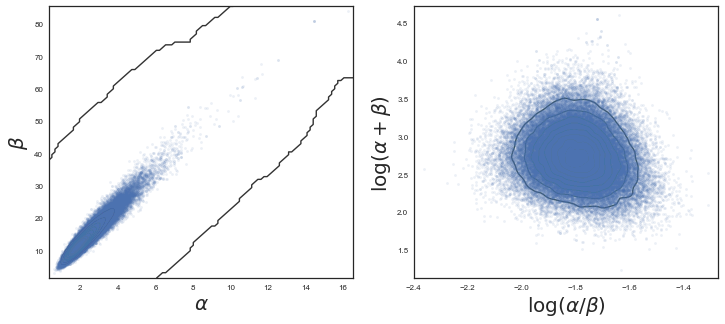

I have used the first half of the original samples for burnin. Note that we need many iterations, and a significant amount of thinning in order to make it converge and have uncorrelated samples. We plot the $\alpha$ and $\beta$ marginals and create a 2D histogram or KDE plot (sns.kdeplot in seaborn) of the marginal posterior density in the space $x = \alpha/\beta$, $y = log(\alpha + \beta)$. Further down we also look at the autocorrelation plots for $\alpha$, $\beta$, and $\theta_1$.

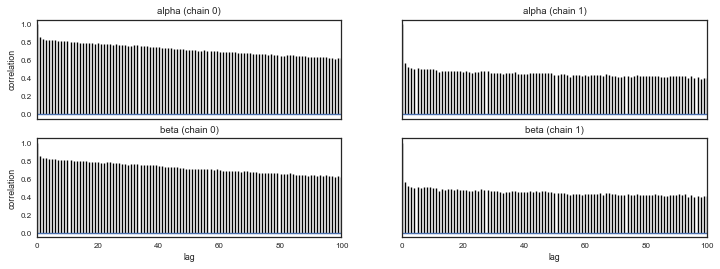

Autocorrelation

pm.autocorrplot(mtrace,varnames=['alpha','beta'])

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x11355b3c8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x17f2d8da0>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x180214080>,

<matplotlib.axes._subplots.AxesSubplot object at 0x181a0c9b0>]], dtype=object)

NUTS

Let’s try with NUTS now:

with tumor_model:

tumor_trace = pm.sample(40000)#, step, start=mu)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, nu, mu]

Sampling 2 chains: 100%|██████████| 81000/81000 [03:33<00:00, 379.93draws/s]

The number of effective samples is smaller than 25% for some parameters.

Discussion on warmup and adaptation: https://andrewgelman.com/2017/12/15/burn-vs-warm-iterative-simulation-algorithms/

tt = tumor_trace[5000::]

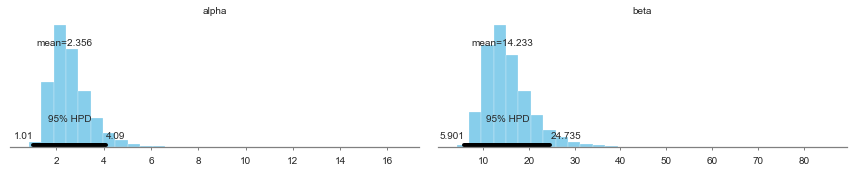

pm.summary(tt)

| mean | sd | mc_error | hpd_2.5 | hpd_97.5 | n_eff | Rhat | |

|---|---|---|---|---|---|---|---|

| mu | 0.142671 | 0.013482 | 0.000049 | 0.116257 | 0.169103 | 59109.621269 | 0.999987 |

| nu | 0.256804 | 0.043600 | 0.000395 | 0.173587 | 0.343456 | 14076.577169 | 1.000003 |

| alpha | 2.356234 | 0.885711 | 0.009062 | 1.009642 | 4.090366 | 11920.240119 | 1.000041 |

| beta | 14.232511 | 5.335247 | 0.053187 | 5.901158 | 24.734979 | 12760.797477 | 1.000032 |

| theta__0 | 0.062545 | 0.041190 | 0.000155 | 0.001426 | 0.142216 | 57114.307338 | 0.999986 |

| theta__1 | 0.062545 | 0.041059 | 0.000168 | 0.000175 | 0.140762 | 61814.151395 | 0.999987 |

| theta__2 | 0.062449 | 0.041228 | 0.000180 | 0.000782 | 0.141716 | 58898.117106 | 0.999986 |

| theta__3 | 0.062585 | 0.041198 | 0.000179 | 0.000580 | 0.141435 | 58406.712770 | 0.999987 |

| theta__4 | 0.062153 | 0.040964 | 0.000176 | 0.000585 | 0.141045 | 60698.101006 | 1.000010 |

| theta__5 | 0.062497 | 0.041606 | 0.000164 | 0.000292 | 0.142410 | 63046.941193 | 0.999993 |

| theta__6 | 0.062770 | 0.041512 | 0.000187 | 0.000105 | 0.141784 | 63023.817321 | 0.999987 |

| theta__7 | 0.064208 | 0.042452 | 0.000168 | 0.000571 | 0.145774 | 61077.079804 | 0.999986 |

| theta__8 | 0.064273 | 0.042562 | 0.000181 | 0.000700 | 0.146872 | 57544.041581 | 0.999992 |

| theta__9 | 0.064479 | 0.042579 | 0.000186 | 0.000359 | 0.146103 | 64880.809084 | 0.999986 |

| theta__10 | 0.064248 | 0.042197 | 0.000180 | 0.000564 | 0.145700 | 62979.973136 | 1.000004 |

| theta__11 | 0.066199 | 0.043631 | 0.000201 | 0.000633 | 0.149525 | 60156.065174 | 0.999999 |

| theta__12 | 0.066337 | 0.043481 | 0.000172 | 0.001097 | 0.150348 | 64173.087755 | 0.999986 |

| theta__13 | 0.068089 | 0.044675 | 0.000193 | 0.000411 | 0.153202 | 60800.136182 | 1.000011 |

| theta__14 | 0.090601 | 0.047484 | 0.000176 | 0.010498 | 0.181864 | 86662.352407 | 0.999986 |

| theta__15 | 0.090951 | 0.048220 | 0.000165 | 0.009726 | 0.184524 | 83324.203128 | 1.000006 |

| theta__16 | 0.090458 | 0.047725 | 0.000177 | 0.010900 | 0.183688 | 79788.348745 | 1.000009 |

| theta__17 | 0.090624 | 0.048168 | 0.000196 | 0.010582 | 0.184257 | 83635.915830 | 0.999986 |

| theta__18 | 0.093077 | 0.048915 | 0.000165 | 0.011609 | 0.188544 | 87567.638908 | 0.999986 |

| theta__19 | 0.093323 | 0.049052 | 0.000158 | 0.009923 | 0.187825 | 85158.958841 | 0.999988 |

| theta__20 | 0.095923 | 0.050479 | 0.000169 | 0.012296 | 0.195612 | 90532.353113 | 0.999987 |

| theta__21 | 0.095991 | 0.050579 | 0.000175 | 0.011063 | 0.194170 | 81859.084380 | 0.999986 |

| theta__22 | 0.122798 | 0.049803 | 0.000157 | 0.034500 | 0.220019 | 95776.052091 | 0.999995 |

| theta__23 | 0.104218 | 0.047498 | 0.000164 | 0.023454 | 0.199208 | 84808.659289 | 1.000001 |

| theta__24 | 0.106730 | 0.048697 | 0.000185 | 0.023302 | 0.203106 | 94290.434437 | 0.999991 |

| theta__25 | 0.109694 | 0.050011 | 0.000162 | 0.022267 | 0.207214 | 102235.578657 | 0.999986 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| theta__40 | 0.146865 | 0.058558 | 0.000183 | 0.041777 | 0.261984 | 103652.372571 | 0.999986 |

| theta__41 | 0.147525 | 0.065778 | 0.000207 | 0.030102 | 0.274830 | 105007.444810 | 0.999986 |

| theta__42 | 0.176222 | 0.047421 | 0.000175 | 0.086746 | 0.269802 | 79145.084384 | 1.000013 |

| theta__43 | 0.185808 | 0.047745 | 0.000169 | 0.099794 | 0.283515 | 82452.543457 | 0.999986 |

| theta__44 | 0.174455 | 0.063212 | 0.000161 | 0.060966 | 0.300280 | 101300.501555 | 0.999997 |

| theta__45 | 0.174581 | 0.063210 | 0.000230 | 0.062059 | 0.301569 | 95290.715514 | 0.999986 |

| theta__46 | 0.174436 | 0.063561 | 0.000197 | 0.058815 | 0.298835 | 90052.314424 | 0.999986 |

| theta__47 | 0.174391 | 0.063421 | 0.000225 | 0.059492 | 0.299640 | 90037.774671 | 1.000008 |

| theta__48 | 0.174598 | 0.063581 | 0.000213 | 0.063073 | 0.304042 | 101955.788989 | 1.000021 |

| theta__49 | 0.174643 | 0.063113 | 0.000226 | 0.059909 | 0.298779 | 91349.607255 | 1.000003 |

| theta__50 | 0.174376 | 0.063243 | 0.000188 | 0.061179 | 0.300758 | 88346.091753 | 1.000005 |

| theta__51 | 0.192097 | 0.049554 | 0.000171 | 0.100407 | 0.291313 | 96696.415050 | 0.999998 |

| theta__52 | 0.179781 | 0.065145 | 0.000211 | 0.062991 | 0.309483 | 85222.955448 | 0.999987 |

| theta__53 | 0.179466 | 0.064806 | 0.000202 | 0.064987 | 0.310693 | 92152.363771 | 0.999987 |

| theta__54 | 0.179614 | 0.064603 | 0.000202 | 0.062636 | 0.307698 | 93720.581239 | 1.000077 |

| theta__55 | 0.191451 | 0.063981 | 0.000211 | 0.073988 | 0.317621 | 93847.064895 | 0.999986 |

| theta__56 | 0.213938 | 0.052120 | 0.000174 | 0.114844 | 0.316490 | 92842.549308 | 0.999987 |

| theta__57 | 0.219551 | 0.051596 | 0.000199 | 0.121534 | 0.320302 | 71468.589126 | 1.000073 |

| theta__58 | 0.202631 | 0.067303 | 0.000233 | 0.078683 | 0.335506 | 79052.827788 | 0.999995 |

| theta__59 | 0.202387 | 0.067428 | 0.000223 | 0.077808 | 0.335611 | 78964.861693 | 0.999987 |

| theta__60 | 0.212595 | 0.066060 | 0.000234 | 0.092429 | 0.344337 | 86759.061230 | 0.999992 |

| theta__61 | 0.208208 | 0.069223 | 0.000268 | 0.082436 | 0.345336 | 79764.736883 | 1.000003 |

| theta__62 | 0.218662 | 0.067560 | 0.000229 | 0.094363 | 0.352974 | 77136.531129 | 0.999986 |

| theta__63 | 0.230253 | 0.071304 | 0.000256 | 0.098650 | 0.372085 | 80093.322450 | 0.999991 |

| theta__64 | 0.230473 | 0.070972 | 0.000244 | 0.100047 | 0.370713 | 81977.147169 | 0.999986 |

| theta__65 | 0.230547 | 0.071464 | 0.000285 | 0.100718 | 0.374298 | 74543.109055 | 1.000055 |

| theta__66 | 0.268448 | 0.054589 | 0.000191 | 0.164719 | 0.375830 | 77466.938358 | 0.999995 |

| theta__67 | 0.278563 | 0.057947 | 0.000238 | 0.168726 | 0.392856 | 67498.481305 | 0.999992 |

| theta__68 | 0.274266 | 0.057061 | 0.000196 | 0.167632 | 0.388138 | 75987.954939 | 0.999991 |

| theta__69 | 0.282718 | 0.073329 | 0.000329 | 0.148560 | 0.432725 | 60596.486567 | 0.999994 |

74 rows × 7 columns

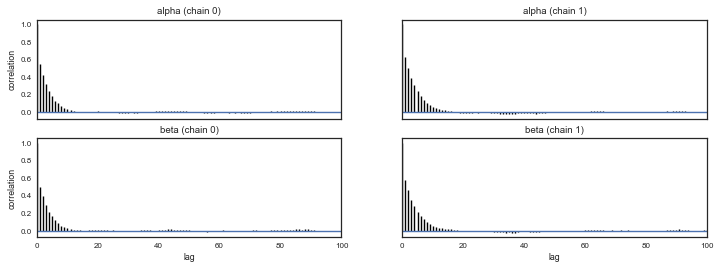

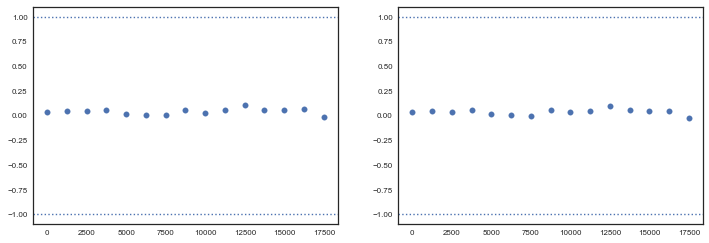

pm.autocorrplot(tt, varnames=['alpha','beta'])

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x18c574d30>,

<matplotlib.axes._subplots.AxesSubplot object at 0x18c56d7b8>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x1965c1b38>,

<matplotlib.axes._subplots.AxesSubplot object at 0x1965b6eb8>]], dtype=object)

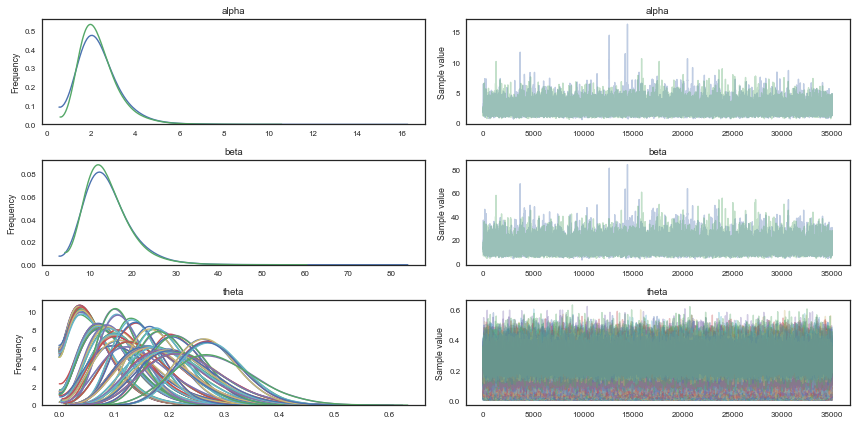

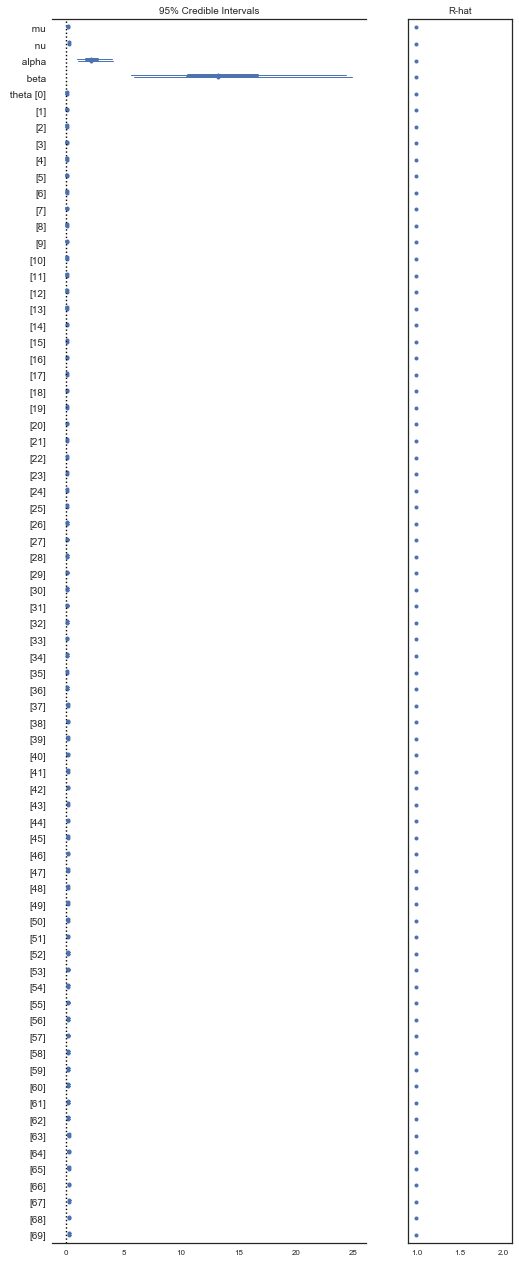

from pymc3 import traceplot

#traceplot(bioassay_trace[500:], varnames=['alpha'])

traceplot(tt, varnames=['alpha','beta','theta']);

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_base.py:3449: MatplotlibDeprecationWarning:

The `ymin` argument was deprecated in Matplotlib 3.0 and will be removed in 3.2. Use `bottom` instead.

alternative='`bottom`', obj_type='argument')

fig = plt.subplots(1, 2, figsize=(12, 5))

plt.subplot(1,2,1)

plt.plot(tt['alpha'], tt['beta'],'.', alpha=0.1)

sns.kdeplot(tt['alpha'], tt['beta'])

plt.xlabel(r"$\alpha$",size=20)

plt.ylabel(r"$\beta$",size=20)

plt.subplot(1,2,2)

plt.plot(np.log(tt['alpha']/tt['beta']), np.log(tt['alpha']+tt['beta']),'.', alpha=0.1)

sns.kdeplot(np.log(tt['alpha']/tt['beta']), np.log(tt['alpha']+tt['beta']))

plt.xlabel(r"$\log(\alpha/\beta)$",size=20)

plt.ylabel(r"$\log(\alpha+\beta)$",size=20)

Text(0, 0.5, '$\\log(\\alpha+\\beta)$')

Note the advantage of using gradients for sampling (stay tuned for Hamiltonian Monte Carlo). We need way less samples to converge to a similar result as with Metropolis, and autocorrelation plots look beter. Let us move to checking convergence for the NUTS sampler, using the Geweke diagnostic. It is important to check that both $\alpha$ and $\beta$ has converged.

tt.varnames

['mu_interval__',

'nu_interval__',

'theta_logodds__',

'mu',

'nu',

'alpha',

'beta',

'theta']

pm.plot_posterior(tt, varnames=['alpha', 'beta'])

array([<matplotlib.axes._subplots.AxesSubplot object at 0x17fce90f0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x1086839b0>], dtype=object)

plt.figure(figsize=(8, 20))

pm.forestplot(tt, );

from pymc3 import geweke

z1 = geweke(tt, intervals=15)[0]

z2 = geweke(tt, intervals=15)[1]

tt.get_values('alpha', chains=0).shape

(35000,)

fig = plt.subplots(1, 2, figsize=(12, 4))

plt.subplot(1,2,1)

plt.scatter(*z1['alpha'].T)

plt.axhline(-1, 0, 1, linestyle='dotted')

plt.axhline(1, 0, 1, linestyle='dotted')

plt.subplot(1,2,2)

plt.scatter(*z1['beta'].T)

plt.axhline(-1, 0, 1, linestyle='dotted')

plt.axhline(1, 0, 1, linestyle='dotted')

<matplotlib.lines.Line2D at 0x18db456a0>

from pymc3 import sample_ppc

with tumor_model:

tumor_sim = sample_ppc(tt, samples=500)

100%|██████████| 500/500 [00:03<00:00, 138.18it/s]

tumor_sim['obs_deaths'].T[59].shape

(500,)

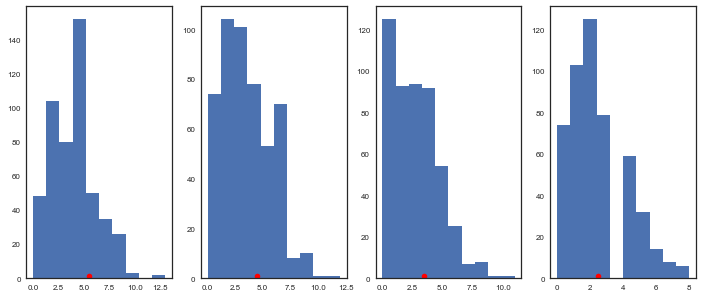

Let’s plot a few of the posterior predictives and the observed data:

fig = plt.subplots(1, 4, figsize=(12, 5))

plt.subplot(1,4,1)

plt.hist(tumor_sim['obs_deaths'].T[59])

plt.plot(tumory[59]+0.5, 1, 'ro')

plt.subplot(1,4,2)

plt.hist(tumor_sim['obs_deaths'].T[49])

plt.plot(tumory[49]+0.5, 1, 'ro')

plt.subplot(1,4,3)

plt.hist(tumor_sim['obs_deaths'].T[39])

plt.plot(tumory[39]+0.5, 1, 'ro')

plt.subplot(1,4,4)

plt.hist(tumor_sim['obs_deaths'].T[29])

plt.plot(tumory[29]+0.5, 1, 'ro')

[<matplotlib.lines.Line2D at 0x19942e198>]

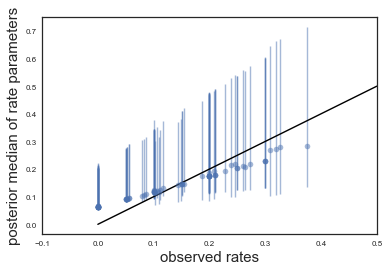

A more meaningful plot is the observed tumor rates on the x-axis against posterior medians for each of the 70 $\theta$’s on the y axis, along with error bars obtained from finding the 2.5 and 97.5 percentiles. With df_summary we can get the summary with the means and the percentiles directly into a pandas dataframe:

from pymc3 import summary

df_sum = summary(tt, varnames=['theta'])

df_sum.head()

| mean | sd | mc_error | hpd_2.5 | hpd_97.5 | n_eff | Rhat | |

|---|---|---|---|---|---|---|---|

| theta__0 | 0.062545 | 0.041190 | 0.000155 | 0.001426 | 0.142216 | 57114.307338 | 0.999986 |

| theta__1 | 0.062545 | 0.041059 | 0.000168 | 0.000175 | 0.140762 | 61814.151395 | 0.999987 |

| theta__2 | 0.062449 | 0.041228 | 0.000180 | 0.000782 | 0.141716 | 58898.117106 | 0.999986 |

| theta__3 | 0.062585 | 0.041198 | 0.000179 | 0.000580 | 0.141435 | 58406.712770 | 0.999987 |

| theta__4 | 0.062153 | 0.040964 | 0.000176 | 0.000585 | 0.141045 | 60698.101006 | 1.000010 |

medianthetas = df_sum['mean'].values

lowerthetas = df_sum['hpd_2.5'].values

upperthetas = df_sum['hpd_97.5'].values

elowertheta = medianthetas - lowerthetas

euppertheta = upperthetas - medianthetas

Our naive, non-Bayesian estimate of the probabilities would have been just the ratio of rats with tumor to total number of observed rats in each experiment:

ratios=tumory.astype(float)/tumorn

Now let us compare those naive estimates to our posterior estimates:

plt.errorbar(ratios,

medianthetas, yerr=[lowerthetas,upperthetas], fmt='o', alpha=0.5)

plt.plot([0,0.5],[0,0.5],'k-')

plt.xlabel("observed rates",size=15)

plt.ylabel("posterior median of rate parameters",size=15)

plt.xlim(-0.1,0.5)

(-0.1, 0.5)

Also see this problem in the pymc3 examples: https://docs.pymc.io/notebooks/GLM-hierarchical-binominal-model.html