Keywords: energy | hamiltonian monte carlo | nuts | leapfrog | canonical distribution | microcanonical distribution | transition distribution | marginal energy distribution | data augmentation | classical mechanics | detailed balance | statistical mechanics | divergences | step-size | non-centered hierarchical model | hierarchical | Download Notebook

Contents

- Centered parametrization

- The effect of step-size

- Non-centered paramerization

- Path length L

- Tuning HMC(NUTS)

- Efficiency of momentum resampling

%matplotlib inline

import numpy as np

import scipy as sp

import matplotlib as mpl

import matplotlib.cm as cm

import matplotlib.pyplot as plt

import pandas as pd

pd.set_option('display.width', 500)

pd.set_option('display.max_columns', 100)

pd.set_option('display.notebook_repr_html', True)

import seaborn as sns

sns.set_style("whitegrid")

sns.set_context("poster")

import pymc3 as pm

import arviz as az

Centered parametrization

We’ll set up the modelled in what is called a “Centered” parametrization which tells us how $\theta_i$ is modelled: it is written to be directly dependent as a normal distribution from the hyper-parameters.

J = 8

y = np.array([28, 8, -3, 7, -1, 1, 18, 12])

sigma = np.array([15, 10, 16, 11, 9, 11, 10, 18])

We set up our priors in a Hierarchical model to use this centered parametrization. We can say: the $\theta_j$ is drawn from a Normal hyper-prior distribution with parameters $\mu$ and $\tau$. Once we get a $\theta_j$ then can draw the means from it given the data $\sigma_j$ and one such draw corresponds to our data.

\[\mu \sim \mathcal{N}(0, 5)\\ \tau \sim \text{Half-Cauchy}(0, 5)\\ \theta_{j} \sim \mathcal{N}(\mu, \tau)\\ \bar{y_{j}} \sim \mathcal{N}(\theta_{j}, \sigma_{j})\]where $j \in {1, \ldots, 8 }$ and the ${ y_{j}, \sigma_{j} }$ are given as data

with pm.Model() as schools1:

mu = pm.Normal('mu', 0, sd=5)

tau = pm.HalfCauchy('tau', beta=5)

theta = pm.Normal('theta', mu=mu, sd=tau, shape=J)

obs = pm.Normal('obs', mu=theta, sd=sigma, observed=y)

with schools1:

trace1 = pm.sample(10000)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [00:38<00:00, 543.35draws/s]

There were 192 divergences after tuning. Increase `target_accept` or reparameterize.

There were 152 divergences after tuning. Increase `target_accept` or reparameterize.

The number of effective samples is smaller than 10% for some parameters.

#TODO: ARVIZ, E-BFMI

pm.summary(trace1)

| mean | sd | mc_error | hpd_2.5 | hpd_97.5 | n_eff | Rhat | |

|---|---|---|---|---|---|---|---|

| mu | 4.421838 | 3.335100 | 0.076147 | -2.521747 | 10.889159 | 1666.484372 | 0.999995 |

| theta__0 | 6.607782 | 5.966534 | 0.098909 | -4.777644 | 18.768973 | 3882.800476 | 0.999965 |

| theta__1 | 5.116251 | 4.933157 | 0.080553 | -4.688147 | 15.092325 | 3583.157313 | 1.000047 |

| theta__2 | 3.829182 | 5.527924 | 0.095912 | -7.645811 | 14.453706 | 3228.973893 | 0.999984 |

| theta__3 | 4.825545 | 4.929238 | 0.078297 | -4.987601 | 14.733869 | 4188.067465 | 0.999956 |

| theta__4 | 3.478191 | 4.851977 | 0.092242 | -6.182416 | 13.371734 | 2511.345157 | 1.000152 |

| theta__5 | 4.023393 | 4.996055 | 0.082124 | -6.402197 | 13.759797 | 3842.954606 | 1.000052 |

| theta__6 | 6.660372 | 5.290873 | 0.092386 | -2.831968 | 18.095978 | 3666.135589 | 0.999959 |

| theta__7 | 4.880685 | 5.525661 | 0.079835 | -5.816082 | 16.624295 | 5084.452672 | 0.999955 |

| tau | 4.138336 | 3.127551 | 0.085624 | 0.639250 | 10.236035 | 1256.355294 | 0.999959 |

pm.trace_to_dataframe(trace1).corr()

| mu | theta__0 | theta__1 | theta__2 | theta__3 | theta__4 | theta__5 | theta__6 | theta__7 | tau | |

|---|---|---|---|---|---|---|---|---|---|---|

| mu | 1.000000 | 0.446534 | 0.542310 | 0.563762 | 0.547569 | 0.580491 | 0.565741 | 0.469521 | 0.544208 | -0.116377 |

| theta__0 | 0.446534 | 1.000000 | 0.336050 | 0.192429 | 0.309346 | 0.211380 | 0.225359 | 0.427040 | 0.273259 | 0.401492 |

| theta__1 | 0.542310 | 0.336050 | 1.000000 | 0.299167 | 0.308855 | 0.289636 | 0.289145 | 0.346080 | 0.321100 | 0.109375 |

| theta__2 | 0.563762 | 0.192429 | 0.299167 | 1.000000 | 0.300424 | 0.354484 | 0.336760 | 0.209144 | 0.314495 | -0.192125 |

| theta__3 | 0.547569 | 0.309346 | 0.308855 | 0.300424 | 1.000000 | 0.309420 | 0.330811 | 0.308911 | 0.316515 | 0.028588 |

| theta__4 | 0.580491 | 0.211380 | 0.289636 | 0.354484 | 0.309420 | 1.000000 | 0.351088 | 0.200968 | 0.312110 | -0.235397 |

| theta__5 | 0.565741 | 0.225359 | 0.289145 | 0.336760 | 0.330811 | 0.351088 | 1.000000 | 0.234143 | 0.292970 | -0.143010 |

| theta__6 | 0.469521 | 0.427040 | 0.346080 | 0.209144 | 0.308911 | 0.200968 | 0.234143 | 1.000000 | 0.278426 | 0.390982 |

| theta__7 | 0.544208 | 0.273259 | 0.321100 | 0.314495 | 0.316515 | 0.312110 | 0.292970 | 0.278426 | 1.000000 | 0.022809 |

| tau | -0.116377 | 0.401492 | 0.109375 | -0.192125 | 0.028588 | -0.235397 | -0.143010 | 0.390982 | 0.022809 | 1.000000 |

The Gelman-Rubin statistic seems fine, but notice how small the effective-n’s are? Something is not quite right. Lets see traceplots. Also notice the strong correlations between some $\theta$s and $\tau$ and some $\theta$s and $\mu$.

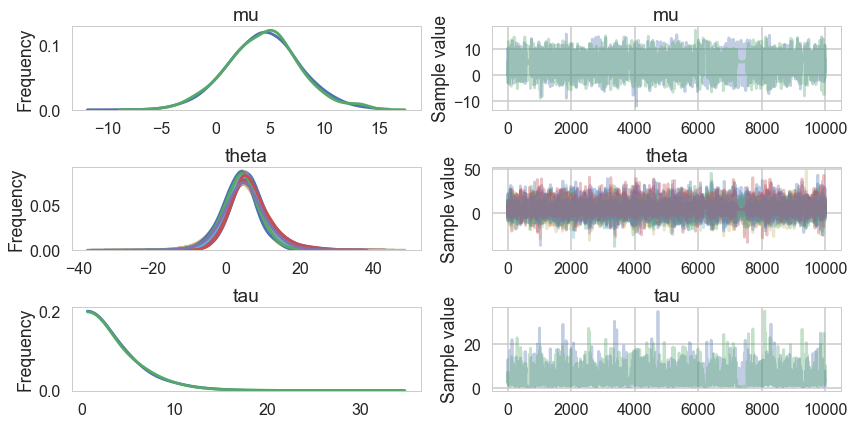

pm.traceplot(trace1);

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_base.py:3449: MatplotlibDeprecationWarning:

The `ymin` argument was deprecated in Matplotlib 3.0 and will be removed in 3.2. Use `bottom` instead.

alternative='`bottom`', obj_type='argument')

Its hard to pick the thetas out but $\tau$ looks not so white-noisy. Lets zoom in:

trace1.varnames

['mu', 'tau_log__', 'theta', 'tau']

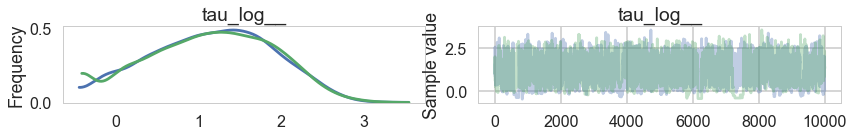

pm.traceplot(trace1, varnames=['tau_log__'])

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_base.py:3449: MatplotlibDeprecationWarning:

The `ymin` argument was deprecated in Matplotlib 3.0 and will be removed in 3.2. Use `bottom` instead.

alternative='`bottom`', obj_type='argument')

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x12138a6a0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x1213accc0>]], dtype=object)

There seems to be some stickiness at lower values in the trace. Zooming in even more helps us see this better:

plt.plot(trace1['tau_log__'], alpha=0.6)

plt.axvline(10000, color="r")

#plt.plot(short_trace['tau_log_'][5000:], alpha=0.6);

<matplotlib.lines.Line2D at 0x12138a5f8>

Tracking divergences

divergent = trace1['diverging']

divergent

array([False, False, False, ..., False, False, False], dtype=bool)

print('Number of Divergent %d' % divergent.nonzero()[0].size)

divperc = divergent.nonzero()[0].size/len(trace1)

print('Percentage of Divergent %.5f' % divperc)

Number of Divergent 344

Percentage of Divergent 0.03440

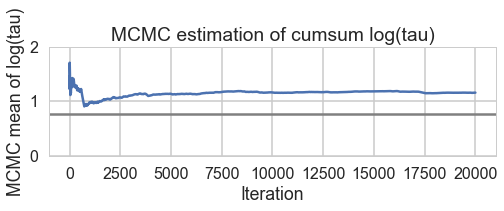

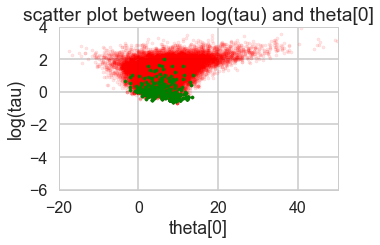

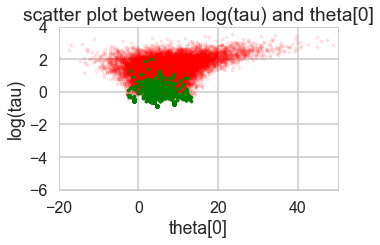

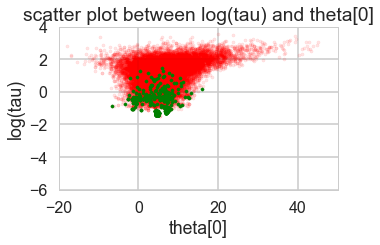

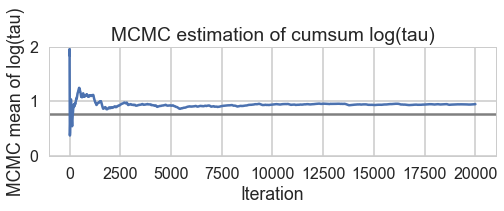

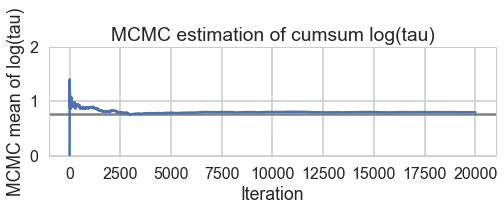

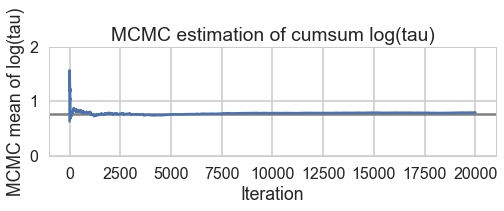

def biasplot(trace):

logtau = trace['tau_log__']

mlogtau = [np.mean(logtau[:i]) for i in np.arange(1, len(logtau))]

plt.figure(figsize=(8, 2))

plt.axhline(0.7657852, lw=2.5, color='gray')

plt.plot(mlogtau, lw=2.5)

plt.ylim(0, 2)

plt.xlabel('Iteration')

plt.ylabel('MCMC mean of log(tau)')

plt.title('MCMC estimation of cumsum log(tau)')

biasplot(trace1)

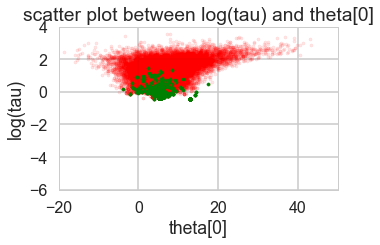

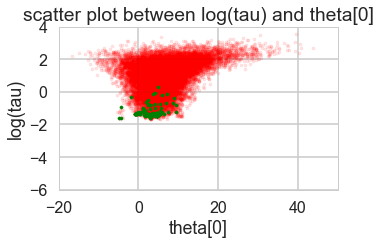

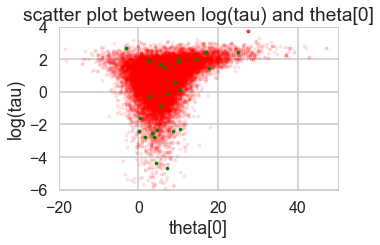

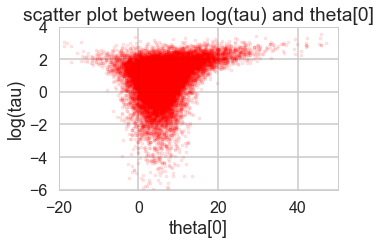

def funnelplot(trace):

logtau = trace['tau_log__']

divergent = trace['diverging']

theta_trace = trace['theta']

theta0 = theta_trace[:, 0]

plt.figure(figsize=(5, 3))

plt.scatter(theta0[divergent == 0], logtau[divergent == 0], s=10, color='r', alpha=0.1)

plt.scatter(theta0[divergent == 1], logtau[divergent == 1], s=10, color='g')

plt.axis([-20, 50, -6, 4])

plt.ylabel('log(tau)')

plt.xlabel('theta[0]')

plt.title('scatter plot between log(tau) and theta[0]')

plt.show()

funnelplot(trace1)

You can also get an idea of your acceptance rate. 65% is decent for NUTS.

np.mean(trace1['mean_tree_accept'])

0.74708717366214583

azdata1 = az.from_pymc3(

trace=trace1)

az.bfmi(azdata1.sample_stats.energy)

array([ 0.27481475, 0.28938651])

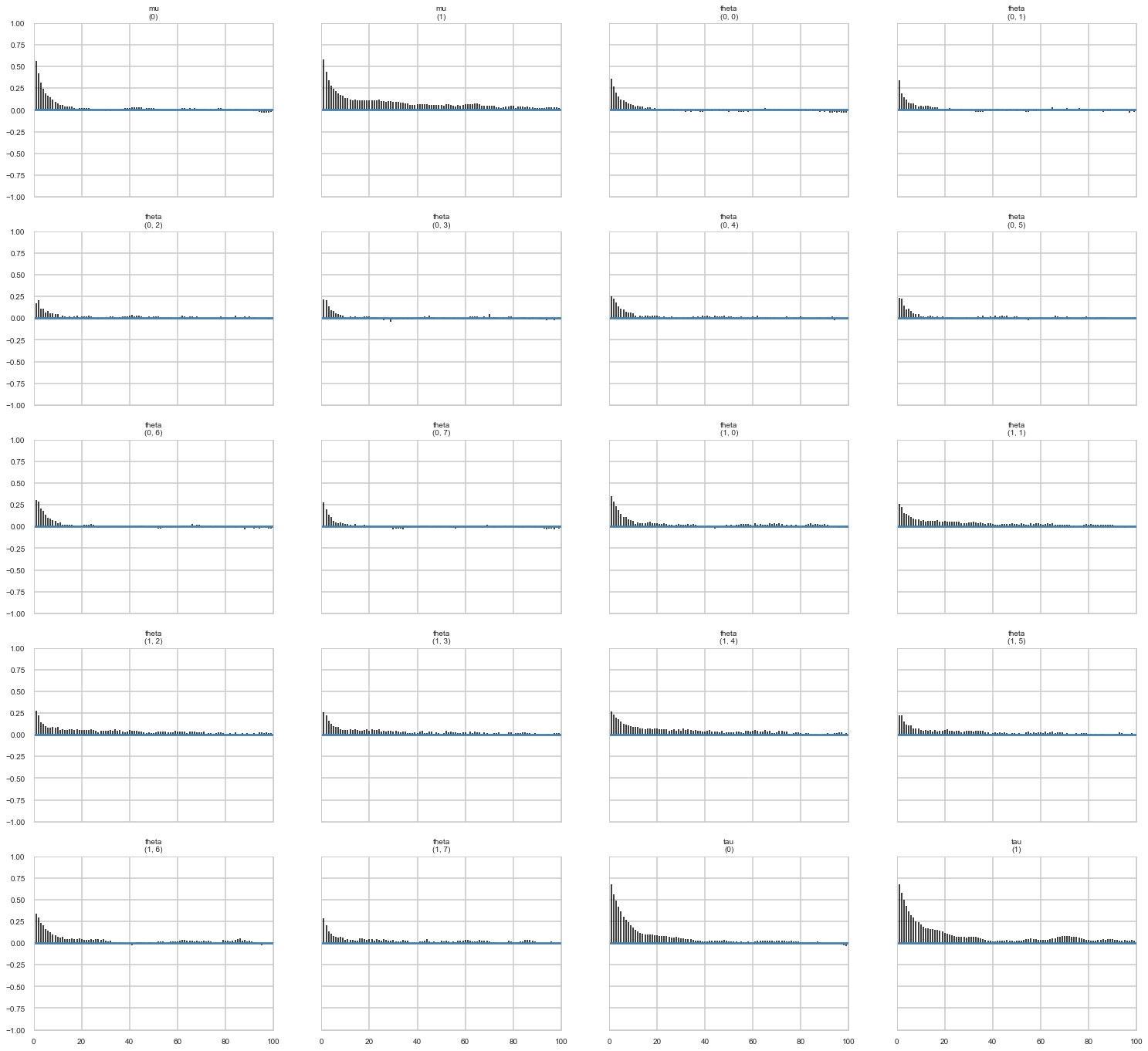

az.plot_autocorr(azdata1);

Where are the divergences coming from?

Divergences can be a sign of the symplectic integration going off to infinity, or a false positive. False positives occur because instead of waiting for infinity, some heuristics are used. This is typically true of divergences not deep in the funnel, where the curvature of the target distribution is high.

The effect of step-size

Looking at the docs for the NUTS sampler at https://pymc-devs.github.io/pymc3/api/inference.html#module-pymc3.step_methods.hmc.nuts , we see that we can co-erce a smaller step-size $\epsilon$, and thus an ok symplectic integration from our sampler by increasing the target acceptance rate.

If we do this, then we have geometric ergodicity (we go everywhere!) between the Hamiltonian transitions (ie in the leapfrogs) and the target distribution. This should result in the divergence rate decreasing.

But if for some reason we do not have geometric ergodicity, then divergences will persist. This can happen deep in the funnel, where even drastic decreases in the step size are not able to explore the highly curved geometry.

with schools1:

step = pm.NUTS(target_accept=.85)

trace1_85 = pm.sample(10000, step=step)

with schools1:

step = pm.NUTS(target_accept=.90)

trace1_90 = pm.sample(10000, step=step)

with schools1:

step = pm.NUTS(target_accept=.95)

trace1_95 = pm.sample(10000, step=step)

with schools1:

step = pm.NUTS(target_accept=.99)

trace1_99 = pm.sample(10000, step=step)

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [00:32<00:00, 640.18draws/s]

There were 228 divergences after tuning. Increase `target_accept` or reparameterize.

There were 602 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.593123095327, but should be close to 0.85. Try to increase the number of tuning steps.

The estimated number of effective samples is smaller than 200 for some parameters.

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [00:35<00:00, 598.26draws/s]

There were 838 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.70706900873, but should be close to 0.9. Try to increase the number of tuning steps.

There were 284 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.658746391353, but should be close to 0.9. Try to increase the number of tuning steps.

The number of effective samples is smaller than 10% for some parameters.

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [01:22<00:00, 253.67draws/s]

There were 206 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.872542834364, but should be close to 0.95. Try to increase the number of tuning steps.

There were 190 divergences after tuning. Increase `target_accept` or reparameterize.

The number of effective samples is smaller than 10% for some parameters.

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [theta, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [03:31<00:00, 99.48draws/s]

There were 24 divergences after tuning. Increase `target_accept` or reparameterize.

There were 89 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.9516802872, but should be close to 0.99. Try to increase the number of tuning steps.

The number of effective samples is smaller than 10% for some parameters.

for t in [trace1_85, trace1_90, trace1_95, trace1_99]:

print("Acceptance", np.mean(t['mean_tree_accept']), "Step Size", np.mean(t['step_size']), "Divergence", np.sum(t['diverging']))

Acceptance 0.692500227779 Step Size 0.247497861579 Divergence 830

Acceptance 0.682924981865 Step Size 0.172543729336 Divergence 1123

Acceptance 0.893198124991 Step Size 0.101784236938 Divergence 396

Acceptance 0.964135746997 Step Size 0.0653667162649 Divergence 113

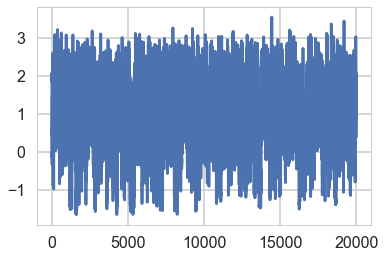

for t in [trace1_85, trace1_90, trace1_95, trace1_99]:

biasplot(t)

funnelplot(t)

plt.plot(trace1_99['tau_log__'])

[<matplotlib.lines.Line2D at 0x121b72278>]

df99 = pm.trace_to_dataframe(trace1_99)

df99.head()

| mu | theta__0 | theta__1 | theta__2 | theta__3 | theta__4 | theta__5 | theta__6 | theta__7 | tau | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 7.693776 | 14.477088 | 10.353145 | 18.408850 | 0.004432 | 8.185481 | 13.967940 | 8.531192 | 9.507294 | 6.308449 |

| 1 | 6.407051 | 0.709888 | 16.086012 | 2.926935 | 10.425556 | 7.994480 | 3.554593 | 18.974226 | 13.514982 | 8.005093 |

| 2 | 4.084759 | 8.556587 | 1.263262 | 9.449619 | 4.694946 | 5.025554 | 6.031463 | 1.203137 | 2.035244 | 2.936942 |

| 3 | 6.586736 | 8.860204 | 6.887298 | 5.738129 | 5.422712 | 4.859962 | 5.804170 | 7.349872 | 6.061337 | 2.313194 |

| 4 | 5.967213 | 7.087187 | 7.038952 | 5.864002 | 7.126083 | 4.261743 | 6.588078 | 9.380298 | 4.836616 | 1.379508 |

df99.corr()

| mu | theta__0 | theta__1 | theta__2 | theta__3 | theta__4 | theta__5 | theta__6 | theta__7 | tau | |

|---|---|---|---|---|---|---|---|---|---|---|

| mu | 1.000000 | 0.499408 | 0.578907 | 0.598298 | 0.584340 | 0.586283 | 0.599065 | 0.503385 | 0.573302 | -0.080196 |

| theta__0 | 0.499408 | 1.000000 | 0.373228 | 0.239095 | 0.334952 | 0.214745 | 0.272826 | 0.461365 | 0.341573 | 0.398513 |

| theta__1 | 0.578907 | 0.373228 | 1.000000 | 0.336129 | 0.374738 | 0.342857 | 0.356699 | 0.374444 | 0.371255 | 0.101561 |

| theta__2 | 0.598298 | 0.239095 | 0.336129 | 1.000000 | 0.342234 | 0.379636 | 0.375234 | 0.258921 | 0.329257 | -0.165129 |

| theta__3 | 0.584340 | 0.334952 | 0.374738 | 0.342234 | 1.000000 | 0.348511 | 0.376360 | 0.354740 | 0.365683 | 0.054737 |

| theta__4 | 0.586283 | 0.214745 | 0.342857 | 0.379636 | 0.348511 | 1.000000 | 0.370492 | 0.222877 | 0.330311 | -0.226055 |

| theta__5 | 0.599065 | 0.272826 | 0.356699 | 0.375234 | 0.376360 | 0.370492 | 1.000000 | 0.286706 | 0.349603 | -0.122573 |

| theta__6 | 0.503385 | 0.461365 | 0.374444 | 0.258921 | 0.354740 | 0.222877 | 0.286706 | 1.000000 | 0.351618 | 0.405648 |

| theta__7 | 0.573302 | 0.341573 | 0.371255 | 0.329257 | 0.365683 | 0.330311 | 0.349603 | 0.351618 | 1.000000 | 0.093862 |

| tau | -0.080196 | 0.398513 | 0.101561 | -0.165129 | 0.054737 | -0.226055 | -0.122573 | 0.405648 | 0.093862 | 1.000000 |

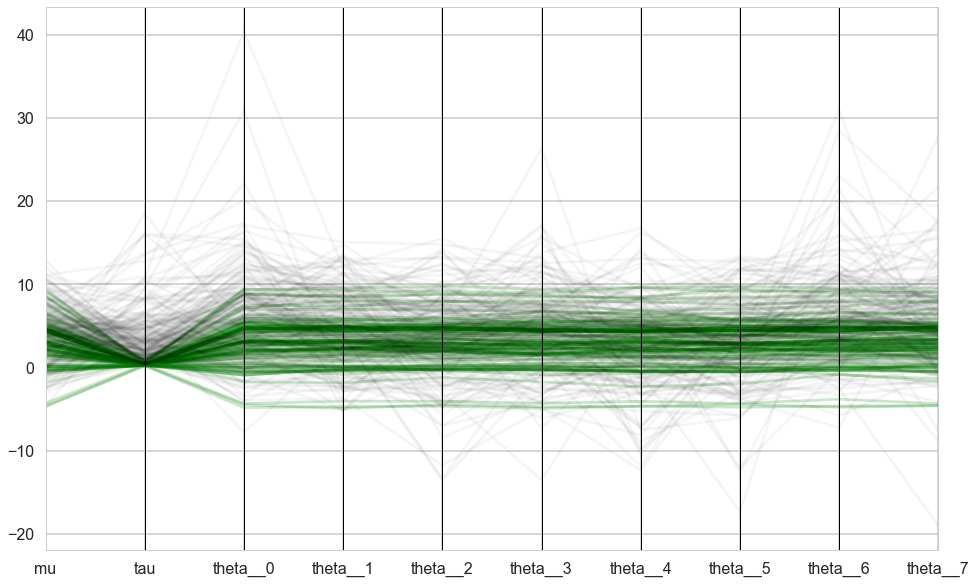

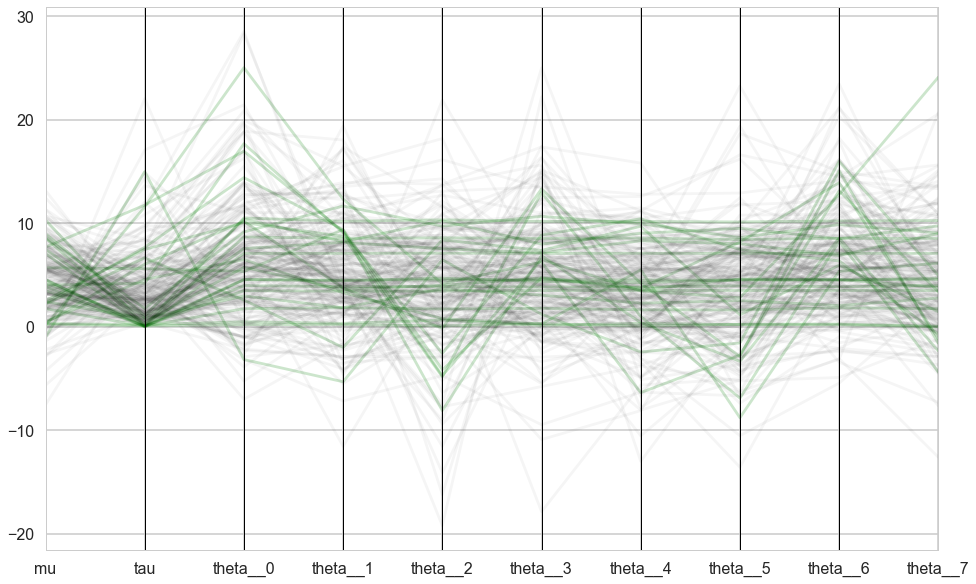

from itertools import cycle, islice

from pandas.plotting import parallel_coordinates as pc

def pcplot(t):

dvs = sum(t['diverging']==True)

dft = pm.trace_to_dataframe(t)

dftsi = dft.reset_index()

plt.figure(figsize=(16, 10))

orderedticks = ['index', 'mu', 'tau']+["theta__{}".format(i) for i in range(8)]

div_colors = list(islice(cycle(['g', 'g', 'g', 'g', 'g']), None, int(dvs)))

pc(dftsi[t['diverging']==True][orderedticks], 'index', color=div_colors, alpha=0.2);

undiv_colors = list(islice(cycle(['k', 'k', 'k', 'k', 'k']), None, 200))

pc(dftsi[t['diverging']==False].sample(200)[orderedticks], 'index', color=undiv_colors, alpha=0.04);

plt.gca().legend_.remove()

pcplot(trace1_99)

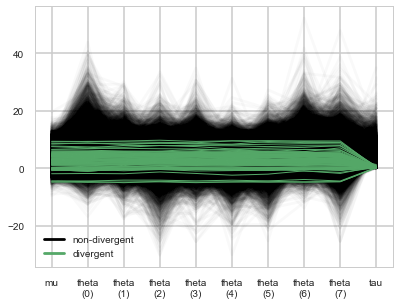

az.plot_parallel(az.from_pymc3(trace=trace1_99))

<matplotlib.axes._subplots.AxesSubplot at 0x123be30b8>

The divergences decrease, but dont totally go away, showing that we have lost some geometric ergodicity. And as we get to a very small step size we explore the funnel much better, but we are now taking our sampler more into a MH like random walk regime, and our sampler looks very strongly autocorrelated.

We know the fix, it is to move to a

Non-centered paramerization

We change our model to:

\[\mu \sim \mathcal{N}(0, 5)\\ \tau \sim \text{Half-Cauchy}(0, 5)\\ \nu_{j} \sim \mathcal{N}(0, 1)\\ \theta_{j} = \mu + \tau\nu_j \\ \bar{y_{j}} \sim \mathcal{N}(\theta_{j}, \sigma_{j})\]Notice how we have factored the dependency of $\theta$ on $\phi = \mu, \tau$ into a deterministic transformation between the layers, leaving the actively sampled variables uncorrelated.

This does two things for us: it reduces steepness and curvature, making for better stepping. It also reduces the strong change in densities, and makes sampling from the transition distribution easier.

with pm.Model() as schools2:

mu = pm.Normal('mu', mu=0, sd=5)

tau = pm.HalfCauchy('tau', beta=5)

nu = pm.Normal('nu', mu=0, sd=1, shape=J)

theta = pm.Deterministic('theta', mu + tau * nu)

obs = pm.Normal('obs', mu=theta, sd=sigma, observed=y)

with schools2:

trace2 = pm.sample(10000)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [nu, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [00:29<00:00, 723.95draws/s]

There were 12 divergences after tuning. Increase `target_accept` or reparameterize.

There were 13 divergences after tuning. Increase `target_accept` or reparameterize.

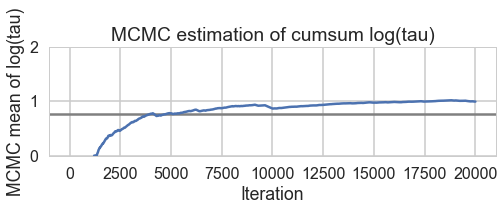

And we reach the true value better as the number of samples increases, decreasing our bias

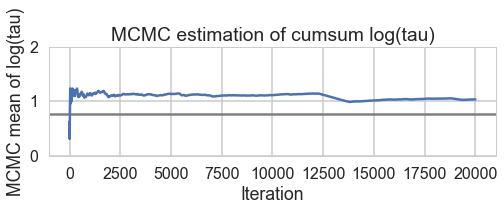

biasplot(trace2)

How about our divergences? They have decreased too.

divergent = trace2['diverging']

print('Number of Divergent %d' % divergent.nonzero()[0].size)

divperc = divergent.nonzero()[0].size/len(trace2)

print('Percentage of Divergent %.5f' % divperc)

Number of Divergent 25

Percentage of Divergent 0.00250

funnelplot(trace2)

pcplot(trace2)

The divergences are infrequent and do not seem to concentrate anywhere, indicative of false positives. Lowering the step size should make them go away.

A smaller step size

with schools2:

step = pm.NUTS(target_accept=.95)

trace2_95 = pm.sample(10000, step=step, init="jitter+adapt_diag")

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [nu, tau, mu]

Sampling 2 chains: 100%|██████████| 21000/21000 [00:58<00:00, 357.60draws/s]

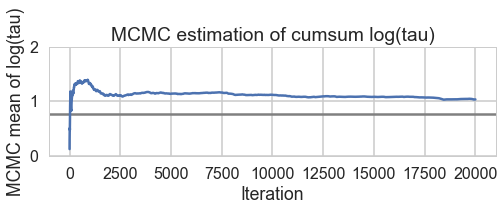

biasplot(trace2_95)

funnelplot(trace2_95)

Indeed at a smaller step-size our false-positive divergences go away, and the lower curvature in our parametrization ensures geometric ergodicity deep in our funnel

prior = pm.sample_prior_predictive(model=schools2)

posterior_predictive = pm.sample_ppc(trace2_95, 500, schools2)

100%|██████████| 500/500 [00:00<00:00, 1152.89it/s]

azdatagood = az.from_pymc3(

trace = trace2_95,

prior=prior,

posterior_predictive = posterior_predictive )

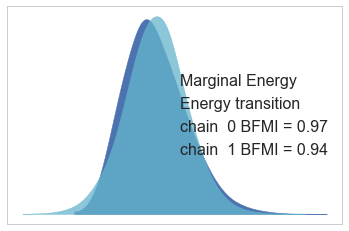

az.bfmi(azdatagood.sample_stats.energy)

array([ 0.96607181, 0.94140452])

Path length L

If we choose too small a $L$ we are returning our HMC sampler to a random walk. How long must a leapfrog run explore a level set of the Hamiltonian (ie of the canonical distribution $p(p,q)$ beofre we force an accept-reject step and a momentum resample?

Clearly if we go too long we’ll be coming back to the neighborhood of areas we might have reached in smaller trajectories. NUTS is one approach to adaptively fix this by not letting trajectories turn on themselves.

In the regular HMC sampler, for slightly complex problems, $L=100$ maybe a good place to start. For a fixed step-size $\epsilon$, we can now check the level of autocorrelation. If it is too much, we want a larger $L$.

Now, the problem with a fixed $L$ is that one $L$ does not work everywhere in a distribution. To see this, note that tails correspond to much higher energies. Thus the level-set surfaces are larger, and a fixed length $L$ trajectory only explores a small portion of this set before a momentum resampling takes us off. This is why a dynamic method like NUTS is a better choice.

Tuning HMC(NUTS)

This requires preliminary runs. In pymc3 some samples are dedicated to this, and an adaptive tuning is carried out according to algorithm 6 in the original NUTS paper: http://www.stat.columbia.edu/~gelman/research/published/nuts.pdf .

But we have seem how to play with step-size within the context of the NUTS sampler, something which we might need to do for tetchy models. Clearly too large an $\epsilon$ can lead to loss of symplecticity. And too small a step-size will get us to a random walk for finite sized $L$. Clearly the adaptive approach in NUTS is a good idea.

In pymc3’s regular HMC sampler a knob step_rand is available which allows us a distribution to sample a step-size from. In the case that we are not adaptively tuning as in NUTS, allowing for this randomness allows for occasionally small values of $\epsilon$ even for large meaned distributions. Note that this should be done at the star of leapfrog, not in-between.

Another place where this is useful is where the exact solution to the Hamiltonian equations (gaussian distribution, harmonic oscillator hamiltonian resulting) has periodicity. If $L\epsilon$ is chosen to be $2\pi$ then our sampler will lack ergodicity. In such a case choose $\epsilon$ from a distribution (or for that matter, $L$).

Finally there are multiple ways to tweak the mass matrix. One might use a variational posterior to obtain a approximate covariance matrix for the target distribution $p(q)$. Or one could use the tuning samples for this purpose. But choosing the mass matrix as the inverse of the covariance matrix of the target is highly recommended, as it will maximally decorrelate parameters of the target distribution.

The covariance matrix also establishes a scale for each parameter. This scale can be used to tweak step size as well. Intuitively the variances are measures of curvature along a particular dimension, and choosing a stepsize in each parameter which accomodates this difference is likely to help symplecticity. I do not believe this optimization is available within pymc3. This does not mean you are out of luck: you could simply redefine the parameters in a scaled form.

If you are combining HMC with other samplers, such as MH for discrete parameters in a gibbs based conditional scheme, then you might prefer smaller $L$ parameters to allow for the other parameters to be updated faster.

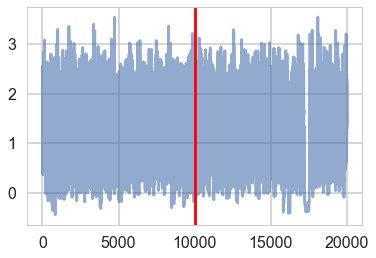

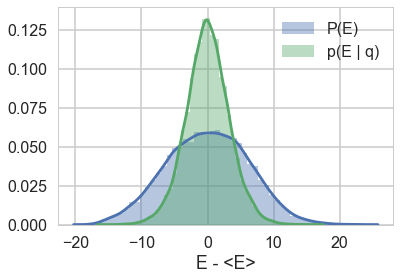

Efficiency of momentum resampling

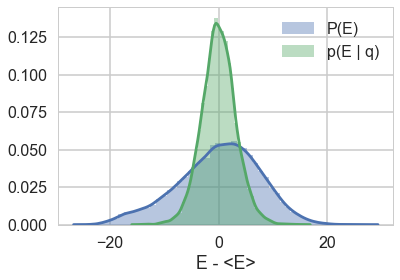

When we talked about the most Hamiltonian-trajectory momentum resampling, we talked about its efficiency. The point there was that you want the marginal energy distribution to match the transition distribution induced by momentum resampling.

pymc3 gives us some handy stats to calculate this:

def resample_plot(t):

sns.distplot(t['energy']-t['energy'].mean(), label="P(E)")

sns.distplot(np.diff(t['energy']), label = "p(E | q)")

plt.legend();

plt.xlabel("E - <E>")

So let us see this for our original trace

resample_plot(trace1);

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_axes.py:6499: MatplotlibDeprecationWarning:

The 'normed' kwarg was deprecated in Matplotlib 2.1 and will be removed in 3.1. Use 'density' instead.

alternative="'density'", removal="3.1")

Awful. The momentum resamples here will do a very slow job of traversing this distribution. This is indicative of the second issue we were having with this centered model (the first was a large step size for the curvature causing loss of symplectivity): the momentum resampling simply cannot provide enough energy to traverse the large energy changes that occur in this hierarchical model.

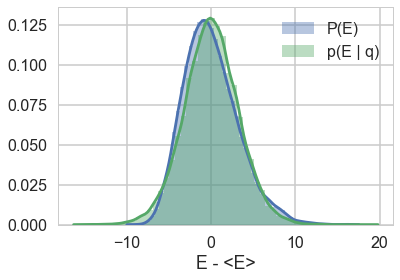

Note the caveat with such a plot obtained from our chains: it only tells us about the energies it explored: not the energies it ought to be exploring, as can be seen in the plot with trace1_99 below. Still, a great diagnostic.

resample_plot(trace1_99)

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_axes.py:6499: MatplotlibDeprecationWarning:

The 'normed' kwarg was deprecated in Matplotlib 2.1 and will be removed in 3.1. Use 'density' instead.

alternative="'density'", removal="3.1")

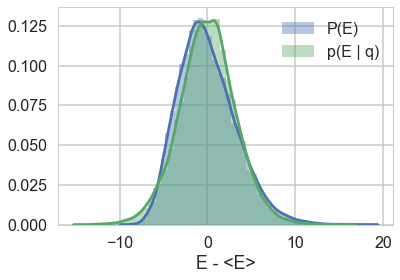

The match is much better for the non-centered version of our model.

resample_plot(trace2)

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_axes.py:6499: MatplotlibDeprecationWarning:

The 'normed' kwarg was deprecated in Matplotlib 2.1 and will be removed in 3.1. Use 'density' instead.

alternative="'density'", removal="3.1")

resample_plot(trace2_95)

//anaconda/envs/py3l/lib/python3.6/site-packages/matplotlib/axes/_axes.py:6499: MatplotlibDeprecationWarning:

The 'normed' kwarg was deprecated in Matplotlib 2.1 and will be removed in 3.1. Use 'density' instead.

alternative="'density'", removal="3.1")

az.plot_energy(azdatagood)

<matplotlib.axes._subplots.AxesSubplot at 0x132673438>

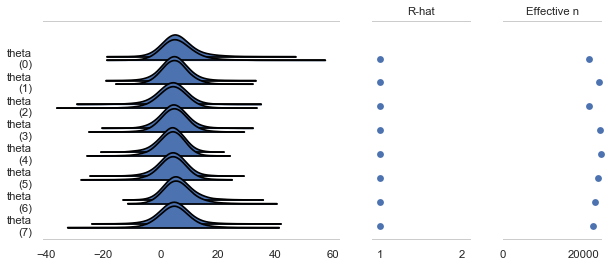

az.plot_forest(azdatagood, var_names=['theta'], kind='ridgeplot', combined=False, ridgeplot_overlap=3)

(<Figure size 720x288 with 3 Axes>,

array([<matplotlib.axes._subplots.AxesSubplot object at 0x13377add8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x133a29908>,

<matplotlib.axes._subplots.AxesSubplot object at 0x133a51dd8>], dtype=object))

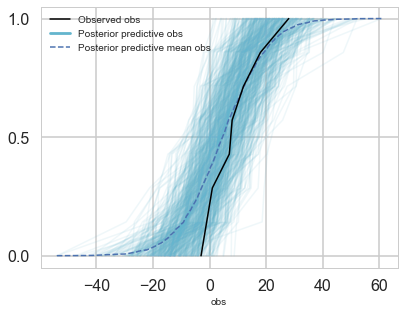

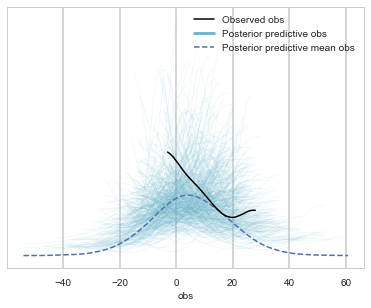

az.plot_ppc(azdatagood, alpha=0.1)

array([<matplotlib.axes._subplots.AxesSubplot object at 0x134176898>], dtype=object)

az.plot_ppc(azdatagood, kind='cumulative', alpha=0.1)

array([<matplotlib.axes._subplots.AxesSubplot object at 0x12265bef0>], dtype=object)